I wanted to test very basic training with a standard library on both Nvidia and Mac, and thought I’d give Axolotl a shot since it (technically) works on Mac. The install on Mac is pretty straightforward:

```shell
# Start from a clean environment (removes any previous "axolotl" env)
conda env remove --name axolotl
conda create -n axolotl python=3.11 -y
conda activate axolotl

# Get the source and install it in editable mode
git clone https://github.com/OpenAccess-AI-Collective/axolotl
cd axolotl
pip install torch
pip install -e '.'
```

One catch: bitsandbytes has to be downgraded to 0.42.0, since 0.43.0 isn’t available on macOS yet.
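Here is a minimal sketch of that downgrade, run after the editable install. It assumes the pin is only needed on macOS, hence the `uname` check, and that pip can resolve `bitsandbytes==0.42.0` in your environment. `BNB_VERSION` is just an illustrative variable name.

```shell
#!/usr/bin/env bash
# Sketch: pin bitsandbytes to 0.42.0 after `pip install -e '.'`.
# Assumption: only macOS (Darwin) needs the pin, since 0.43.0 isn't available there yet.
BNB_VERSION="0.42.0"

if [ "$(uname -s)" = "Darwin" ]; then
  pip install "bitsandbytes==${BNB_VERSION}"
fi
```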

For the training runs, I’ll be using this simple example:

```shell
# Preprocess the dataset with all GPUs hidden
CUDA_VISIBLE_DEVICES="" python -m axolotl.cli.preprocess examples/tiny-llama/lora-mps.yml

# Train, timing the whole run
time accelerate launch -m axolotl.cli.train examples/tiny-llama/lora-mps.yml
```

This LoRA config doesn’t use flash attention, which isn’t available on Mac.

Test Results

The results favor Nvidia by a long shot.

Dual Nvidia 3090

On dual 3090s we finish in under 4 minutes (3:43 on the progress bar, 4m11s wall-clock) at about 9.2 seconds per iteration. The real upside is data parallelism. It cuts the run down to 24 steps, compared with the 52 needed on the other setups.
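The drop from 52 steps to 24 comes from how data-parallel training counts steps. Here is a rough sketch. It assumes Axolotl combines its `micro_batch_size` ($b_{\text{micro}}$) and `gradient_accumulation_steps` ($g$) settings with the GPU count in the usual way. The values in `lora-mps.yml` aren't given in this post, and $N_{\text{samples}}$ and epochs are placeholders:

$$
B_{\text{global}} = b_{\text{micro}} \times g \times N_{\text{GPU}},
\qquad
\text{steps} \approx \text{epochs} \times \frac{N_{\text{samples}}}{B_{\text{global}}}
$$

Going from one GPU to two doubles $B_{\text{global}}$ and roughly halves the step count: $52 / 2 = 26$, close to the observed 24. The exact figure depends on details this formula ignores, so treat it as an approximation.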

```text
real 4m11.428s
user 7m52.010s
sys 0m4.338s

12/24 [01:49<01:50, 9.20s/it]
24/24 [03:43<00:00, 9.31s/it]
```

Single Nvidia 4090

I swapped out my Stable Diffusion setup for a test Axolotl install to see how much difference a 4090 would make. Training finished in about 4 minutes 17 seconds (4m34s wall-clock), which is still quite good.
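The dual 3090s are slower per step (about 9.3 s vs 4.9 s) but run fewer than half as many steps. This sketch multiplies the final step counts by the final s/it rates from the progress bars in this post, and it reproduces the training-loop times of 3:43 and 4:17. The `estimate` helper is hypothetical and only does arithmetic. Wall-clock ("real") time also covers work outside the training loop, so it comes out higher.

```shell
#!/usr/bin/env bash
# Rough check: training-loop time is about steps x seconds per iteration.
# Inputs are the final step counts and s/it rates from the progress bars.
estimate() {
  # $1 = label, $2 = steps, $3 = seconds per iteration
  awk -v label="$1" -v steps="$2" -v rate="$3" 'BEGIN {
    s = int(steps * rate + 0.5)   # round to whole seconds
    printf "%s: %d steps x %.2f s/it = %d s (%dm%02ds)\n", label, steps, rate, s, int(s / 60), s % 60
  }'
}

estimate "Dual 3090" 24 9.31     # prints: Dual 3090: 24 steps x 9.31 s/it = 223 s (3m43s)
estimate "Single 4090" 52 4.94   # prints: Single 4090: 52 steps x 4.94 s/it = 257 s (4m17s)
```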

```text
real 4m34.662s
user 4m26.604s
sys 0m3.565s

13/52 [01:04<03:12, 4.93s/it]
28/52 [02:18<01:55, 4.81s/it]
42/52 [03:27<00:48, 4.82s/it]
52/52 [04:17<00:00, 4.94s/it]
```

Mac M1 Ultra

This took a while. The good news is that it, well, does work. But it took a whopping 45 minutes, about 10 times longer than the 4090. Clearly, for this kind of task on a Mac you’ll need a specialized library like MLX, or you should just rent a GPU.
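To make "about 10 times longer" concrete, this sketch converts the final MM:SS progress-bar times from this post into seconds and divides them. The Mac comes out roughly 10.6x slower than the 4090 and roughly 12.2x slower than the dual 3090s. The `ratio` helper is hypothetical and only does arithmetic.

```shell
#!/usr/bin/env bash
# Compare training-loop times taken from the progress bars (MM:SS format).
ratio() {
  # $1 = slower time "MM:SS", $2 = faster time "MM:SS"; prints slower/faster
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, x, ":"); split(b, y, ":")
    printf "%.1f\n", (x[1] * 60 + x[2]) / (y[1] * 60 + y[2])
  }'
}

echo "M1 Ultra vs single 4090: $(ratio 45:12 04:17)x"   # 2712 s / 257 s -> 10.6x
echo "M1 Ultra vs dual 3090:   $(ratio 45:12 03:43)x"   # 2712 s / 223 s -> 12.2x
```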

```text
real 45m28.174s
user 1m56.735s
sys 2m31.239s

9/52 [07:51<37:31, 52.35s/it]
28/52 [24:24<20:30, 51.28s/it]
42/52 [36:28<08:23, 50.30s/it]
52/52 [45:12<00:00, 52.16s/it]
```

Power Usage

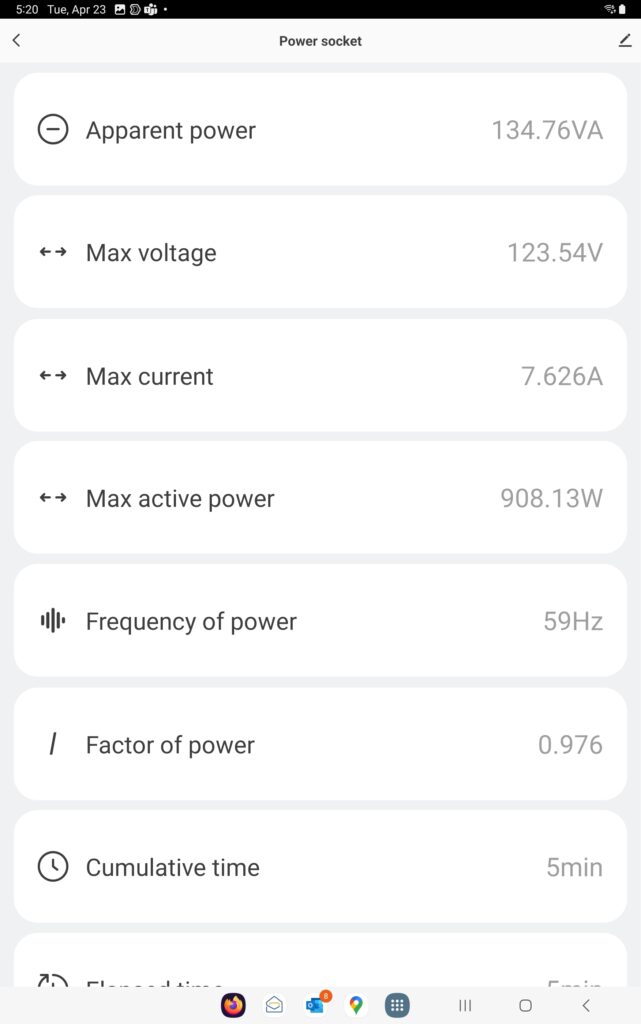

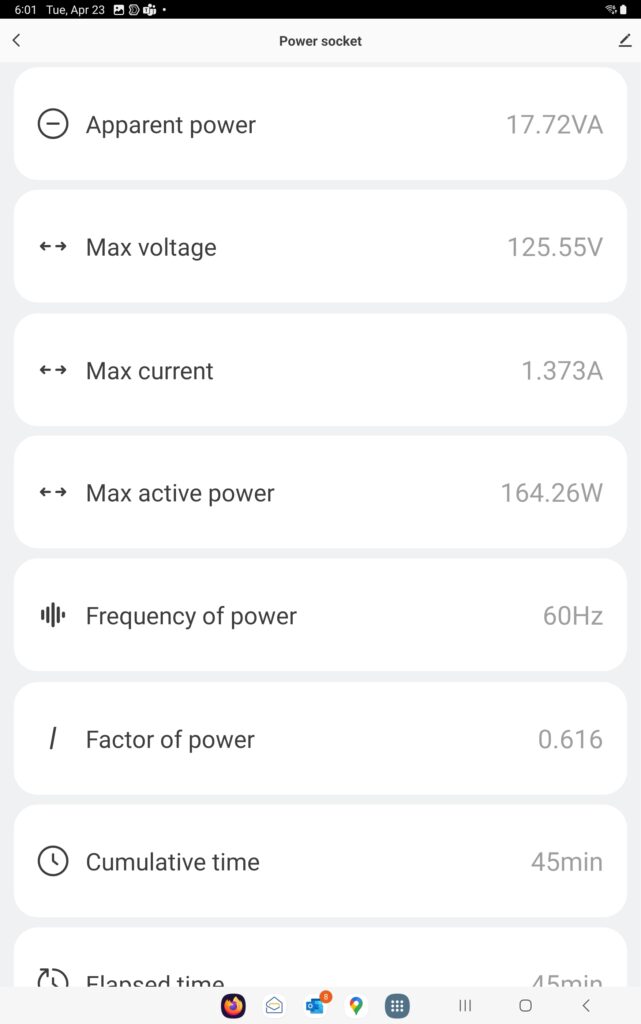

On the dual Nvidia 3090 the machine was sucking down more power than it even uses for inference. Peak power usage was over 900 watts. The Mac peaked at 160 watts, which is really good. But total power usage on Nvidia was less than the Mac because it was so much faster.

Dual 3090 power usage:

M1 Ultra power usage:
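Energy is power multiplied by time, which is how the faster machine can win despite its higher draw. This sketch is a crude illustration, not a measurement. It assumes each machine drew its reported peak (about 900 W and 160 W) for its whole wall-clock run. On that assumption the dual 3090s use about 0.063 kWh and the Mac about 0.121 kWh. To beat that Nvidia figure, the Mac would have to average under roughly 83 W for the entire 45 minutes. The `kwh` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Energy (kWh) = watts x seconds / 3,600,000.
# Assumption: each machine ran at its reported peak draw for the whole
# wall-clock ("real") time. This is an illustration, not a measurement.
kwh() {
  # $1 = watts, $2 = seconds
  awk -v w="$1" -v s="$2" 'BEGIN { printf "%.3f\n", w * s / 3600000 }'
}

NVIDIA_S=251.428   # dual 3090: real 4m11.428s
MAC_S=2728.174     # M1 Ultra:  real 45m28.174s

echo "Dual 3090 at 900 W: $(kwh 900 "$NVIDIA_S") kWh"   # 0.063 kWh
echo "M1 Ultra at 160 W:  $(kwh 160 "$MAC_S") kWh"      # 0.121 kWh

# Average draw the Mac would need over its whole run to match the Nvidia estimate
awk -v n="$NVIDIA_S" -v m="$MAC_S" 'BEGIN { printf "Mac break-even average: %.0f W\n", 900 * n / m }'
```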

Clear winner: Nvidia

Axolotl is not a highly optimized training library for Nvidia; it’s easy to use and supports a broad range of AI models. A more optimized library like Unsloth would likely widen Nvidia’s lead even further. I’m interested in seeing how well MLX does, but its model support is fairly narrow, and its fine-tuning uses different parameters that I’ll need to translate to make the test comparable to the runs above.