I have two dual Nvidia 3090 Linux servers for inference, and they’ve worked very well for running large language models. The 48GB of VRAM in each will load models up to 70B at a 5.0bpw quant using EXL2 with a 32k 4-bit context cache, and run at 15 tokens per second pretty reliably.

The issue I have is that I keep getting Indiana AEP power bill alerts.

Geez. I wonder what could be causing that? But a further problem is that 48GB of VRAM isn’t quite enough for the newer, larger open models. Mixtral 8x22B will only really load at a 2.5bpw quant, and Command R Plus fits at around 3.0bpw.
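As a rough back-of-the-envelope check on why those quant sizes are the ceiling, here is a minimal sketch that estimates weight size from parameter count and bits per weight. The parameter counts (about 141B total for Mixtral 8x22B, about 104B for Command R Plus) are approximate published figures rather than numbers from my testing, and the estimate ignores the context cache, activations, and framework overhead, so real usage will be higher.

```python
def weight_size_gb(params_billions: float, bpw: float) -> float:
    """Approximate quantized weight size in GB (1 GB = 10^9 bytes).

    Weights only: no context cache, activations, or runtime overhead.
    """
    return params_billions * bpw / 8


# (parameters in billions, bits per weight); parameter counts are approximate
MODELS = {
    "70B @ 5.0bpw": (70, 5.0),
    "Mixtral 8x22B @ 2.5bpw": (141, 2.5),
    "Command R Plus @ 3.0bpw": (104, 3.0),
    "Mixtral 8x22B @ 5.0bpw": (141, 5.0),
}

for name, (params, bpw) in MODELS.items():
    print(f"{name:26s} ~{weight_size_gb(params, bpw):6.1f} GB of weights")
```

By this estimate a 5.0bpw quant of Mixtral 8x22B would need close to 90GB for weights alone, which is well past 48GB.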

The perplexity loss at these quant levels isn’t great.

WizardLM-2-8x22B Perplexity

| Quant Level (bpw) | Perplexity Score |
|---|---|
| 7.0 | 4.5859 |
| 6.0 | 4.6252 |
| 5.5 | 4.6493 |
| 5.0 | 4.6937 |
| 4.5 | 4.8029 |
| 4.0 | 4.9372 |
| 3.5 | 5.1336 |
| 3.25 | 5.3636 |
| 3.0 | 5.5468 |
| 2.75 | 5.8255 |
| 2.5 | 6.3362 |
| 2.25 | 7.7763 |

Command R Plus Perplexity

| Quant Level (bpw) | Perplexity Score |
|---|---|
| 6.0 | 4.7068 |
| 5.5 | 4.7136 |
| 5.0 | 4.7309 |
| 4.5 | 4.8111 |
| 4.25 | 4.8292 |
| 4.0 | 4.8603 |
| 3.75 | 4.9112 |
| 3.5 | 4.9592 |
| 3.25 | 5.0631 |
| 3.0 | 5.2050 |
| 2.75 | 5.3820 |
| 2.5 | 5.6681 |
| 2.25 | 5.9769 |

Ideally you want a 5.0bpw quant or above. In both tables, perplexity stays nearly flat down to 5.0bpw and starts climbing faster below it.
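To put the tables in relative terms, this small sketch computes how much worse each quant scores than the highest quant listed for the same model. The baseline is an assumption on my part: it is the best quant in the table, not the unquantized model, so the true loss is somewhat larger. Only a subset of rows is copied in to keep it short.

```python
# Perplexity by bits per weight, copied from the tables above (subset of rows)
WIZARDLM_2_8X22B = {7.0: 4.5859, 6.0: 4.6252, 5.0: 4.6937,
                    4.0: 4.9372, 3.0: 5.5468, 2.5: 6.3362}
COMMAND_R_PLUS = {6.0: 4.7068, 5.0: 4.7309, 4.0: 4.8603,
                  3.0: 5.2050, 2.5: 5.6681}


def pct_over_best(table: dict, bpw: float) -> float:
    """Percent perplexity increase relative to the highest quant in the table."""
    best = table[max(table)]  # max() over the keys picks the highest bpw
    return (table[bpw] / best - 1.0) * 100.0


for name, table in (("WizardLM-2-8x22B", WIZARDLM_2_8X22B),
                    ("Command R Plus", COMMAND_R_PLUS)):
    for bpw in sorted(table, reverse=True):
        print(f"{name:18s} {bpw:4.2f}bpw  +{pct_over_best(table, bpw):5.1f}%")
```

At the quant sizes that actually fit in 48GB, that works out to roughly 38% higher perplexity for WizardLM-2-8x22B at 2.5bpw and roughly 11% higher for Command R Plus at 3.0bpw, compared with a few percent or less at 5.0bpw.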

Going Beyond 48GB VRAM

With the release of MLX and its ongoing improvements, Apple Silicon Macs have been getting good reviews lately. The M1 Ultras have been around for a while, are available used, and benchmark well in the 128GB, 64-core GPU configuration.

The used price is also around the same as a base dual 3090 server build, which makes it an interesting test case between the two options.

With 128GB of RAM (115GB usable on the GPU), it can load the newer, larger models at a decent quant size without an issue. An upgraded Nvidia alternative would be a pair of last-generation A6000s to replace the dual 3090s. That would cost around $8k for the cards alone. Going quad 3090 in one server wasn’t going to help my power bill any, and it gets hacky real fast. Besides, I’m already close to maxing out two 110V 15-amp circuits in my basement with 3 AI servers, a Proxmox server, and 2 NAS servers.

Feeling the Speed Difference

After buying and setting up the Mac, I was able to run a side-by-side comparison of inference. I chose to stick with the GGUF format for now to keep the comparison apples to apples. Later, when I get used to the MLX ecosystem, I can compare EXL2 vs MLX.

But this is what Llama-3-70B-Instruct-Q4_K_M at 8k context feels like across both systems.

There is a speed difference, but not a lot.

A Big Difference in Wattage

But the difference in power draw between the two systems was very noticeable.

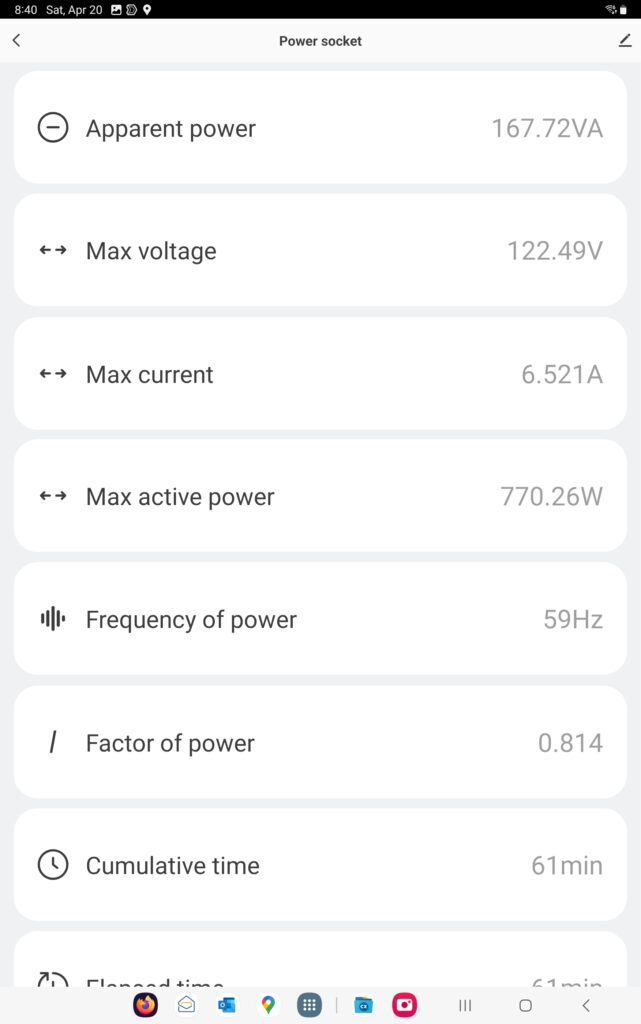

Nvidia Dual 3090 Power Usage

For my Nvidia server, idle power usage was around 125 watts.

Power draw during inference, two bots chatting with each other in Silly Tavern, was around 730-750 watts.

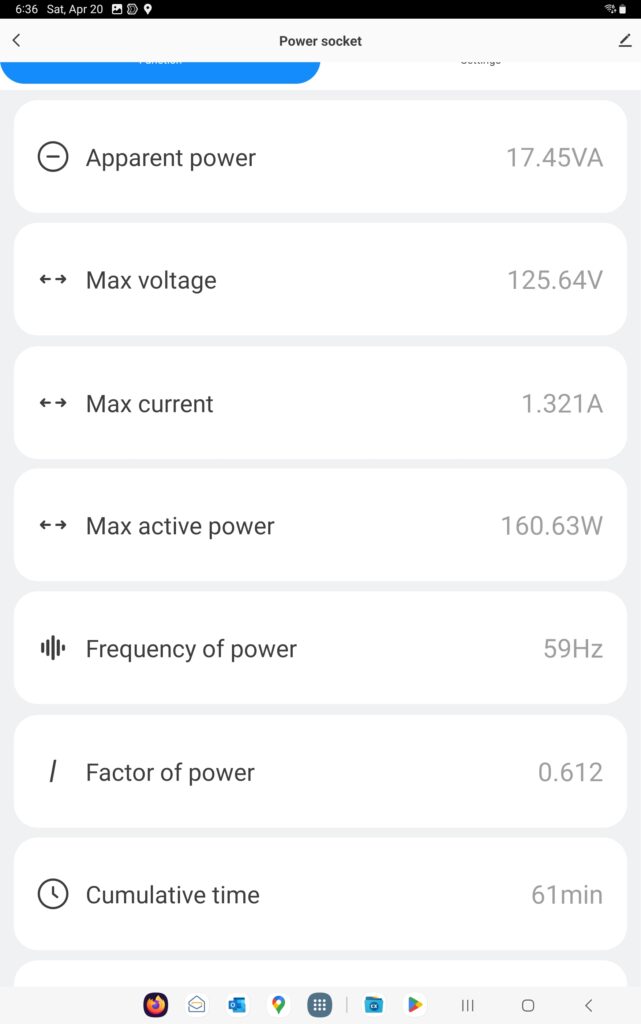

Mac M1 Ultra Power Usage

On the Mac, idle power draw was under 10 watts. This was with the power suspend/screen blanking options turned off since I use this as a headless server and don’t want it to go into sleep mode.

Power draw during inference, two bots chatting with each other in Silly Tavern, was around 145-155 watts.

One Hour Total Power Draw Testing

I ran both machines for a full hour of continuous inference to measure total energy use. Bots in a group chat were set to trigger in Silly Tavern after 1 second, so there was basically no time when the machines were at rest.

1 hour totals – Dual Nvidia 3090

Total energy use was 0.632 kWh over the course of 1 hour.

1 hour totals – Mac M1 Ultra

Total energy use was 0.124 kWh over the course of 1 hour.

Winner: Mac

So the Mac is over 5x more efficient, power-wise, for running single-threaded inference: 0.124 kWh versus 0.632 kWh over the same hour. During full inference it draws only slightly more power (145-155 watts) than one of my Nvidia servers does while completely idle (around 125 watts). And while idle, the Mac sips less than 10 watts.
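For anyone who wants to plug in their own numbers, here is a minimal sketch of the arithmetic behind that comparison. The two kWh figures are my one-hour measurements; the hours per day and the electricity rate are hypothetical placeholders, not my actual usage or my AEP rate.

```python
NVIDIA_KWH = 0.632   # measured: dual 3090 server, 1 hour of inference
MAC_KWH = 0.124      # measured: Mac M1 Ultra, 1 hour of inference
TEST_HOURS = 1.0

# Hypothetical placeholders: substitute your own usage and utility rate
HOURS_PER_DAY = 8
USD_PER_KWH = 0.15


def average_watts(kwh: float, hours: float) -> float:
    """Average power in watts over a test that used `kwh` in `hours`."""
    return kwh * 1000.0 / hours


def monthly_cost(kwh_per_hour: float, hours_per_day: float,
                 usd_per_kwh: float, days: int = 30) -> float:
    """Estimated cost in USD of running inference `hours_per_day` for `days`."""
    return kwh_per_hour * hours_per_day * days * usd_per_kwh


ratio = NVIDIA_KWH / MAC_KWH
print(f"Energy ratio (Nvidia / Mac): {ratio:.2f}x")
for name, kwh in (("Dual 3090", NVIDIA_KWH), ("M1 Ultra", MAC_KWH)):
    watts = average_watts(kwh, TEST_HOURS)
    cost = monthly_cost(kwh / TEST_HOURS, HOURS_PER_DAY, USD_PER_KWH)
    print(f"{name:10s} avg {watts:5.0f} W, ~${cost:.2f}/month at the assumed rate")
```

Note that this compares energy per hour, not energy per token. Since the Mac is a bit slower, the per-token gap is somewhat smaller than the per-hour gap.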

It does this while costing about the same as a dual 3090 server build. It also doesn’t come with the worry of maxing out a 110V 15-amp circuit, is a lot easier to rack, is quiet, and doesn’t require building it yourself or debugging motherboard options and NVLink issues.
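The circuit worry is easy to sketch out too. This is a rough estimate only: the peak wattages are the upper ends of my inference measurements, and the 80% derating for continuous loads is a common rule of thumb that I am assuming here, not something I verified against local code. It also ignores everything else plugged into the same circuit.

```python
VOLTS = 110
AMPS = 15
CONTINUOUS_PERCENT = 80  # assumption: common rule of thumb for continuous loads

# Upper end of the measured inference draw for each machine, in watts
PEAK_WATTS = {
    "Dual 3090 server": 750,
    "Mac M1 Ultra": 155,
}


def circuit_budget_watts(volts: int, amps: int, percent: int) -> int:
    """Usable watts on a circuit after derating (integer math, rounds down)."""
    return volts * amps * percent // 100


def machines_per_circuit(peak_watts: int, budget_watts: int) -> int:
    """How many machines at `peak_watts` fit within `budget_watts`."""
    return budget_watts // peak_watts


budget = circuit_budget_watts(VOLTS, AMPS, CONTINUOUS_PERCENT)
print(f"Usable budget per circuit: {budget} W")
for name, watts in PEAK_WATTS.items():
    print(f"{name:18s} {machines_per_circuit(watts, budget)} per circuit")
```

Under those assumptions, one circuit has room for a single dual 3090 server at full load, while the same budget covers several Macs.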

It gives you 115GB of GPU-usable RAM vs 48GB of VRAM, so you can load larger models or higher-quant 70B models. It is a bit slower, but still quite usable at the 70B level. Features like MLX and how well it shapes up for training still need to be tested, as do other inference workloads (RAG with large fresh context loading is an interest). It also isn’t going to be very good with Stable Diffusion, according to forum posts. A single 4090 server build works best for Stable Diffusion, though I’m curious about the power draw on that setup.